52 changed files with 484 additions and 0 deletions

+ 55

- 0

api/FaceSwap/README.md

+ 70

- 0

api/FaceSwap/face_detection.py

+ 238

- 0

api/FaceSwap/face_swap.py

BIN

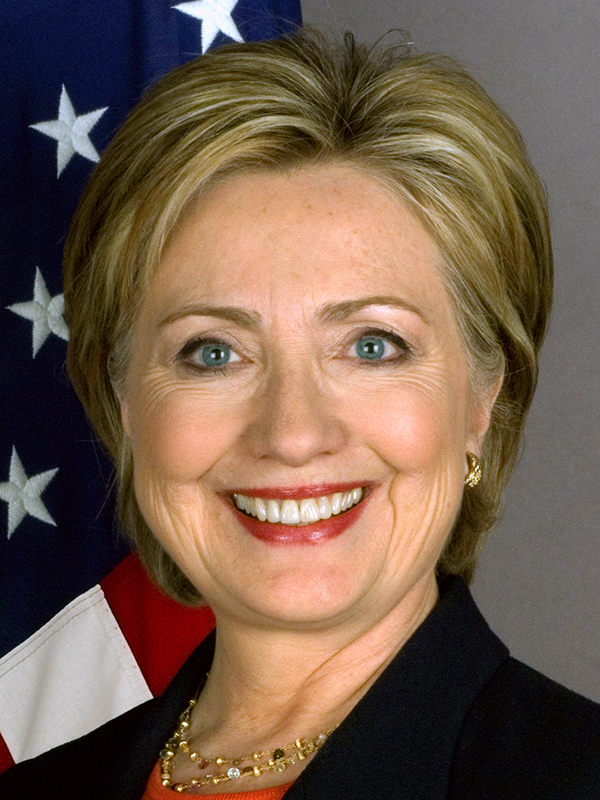

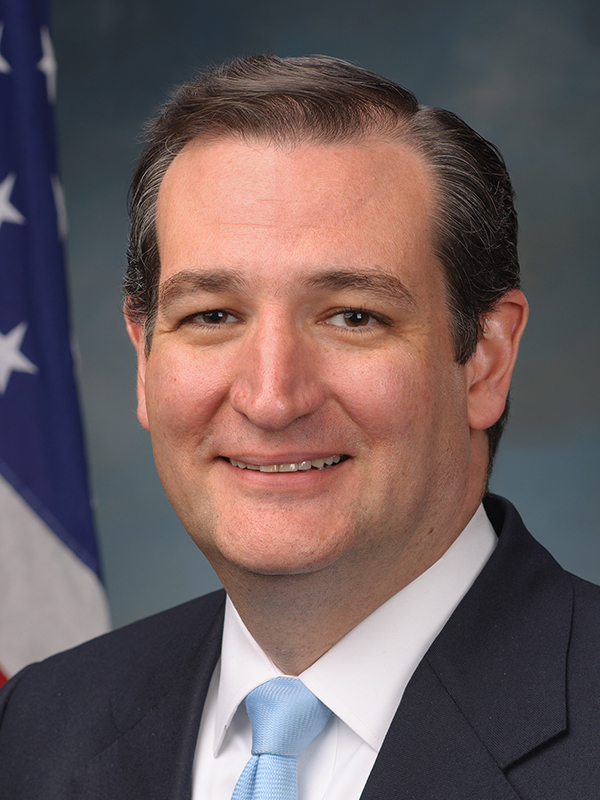

api/FaceSwap/imgs/face.png

BIN

api/FaceSwap/imgs/target.png

BIN

api/FaceSwap/imgs/test1.jpg

BIN

api/FaceSwap/imgs/test2.jpg

BIN

api/FaceSwap/imgs/test3.jpg

BIN

api/FaceSwap/imgs/test4.jpg

BIN

api/FaceSwap/imgs/test5.jpg

BIN

api/FaceSwap/imgs/test6.jpg

BIN

api/FaceSwap/imgs/test7.jpg

BIN

api/FaceSwap/imgs/test8.jpg

+ 12

- 0

api/FaceSwap/jared.txt

+ 47

- 0

api/FaceSwap/main.py

+ 58

- 0

api/FaceSwap/main_video.py

BIN

api/FaceSwap/models/shape_predictor_68_face_landmarks.dat

BIN

api/FaceSwap/nina_noGesture_adj.mp4

BIN

api/FaceSwap/ok.avi

+ 3

- 0

api/FaceSwap/requirements.txt

BIN

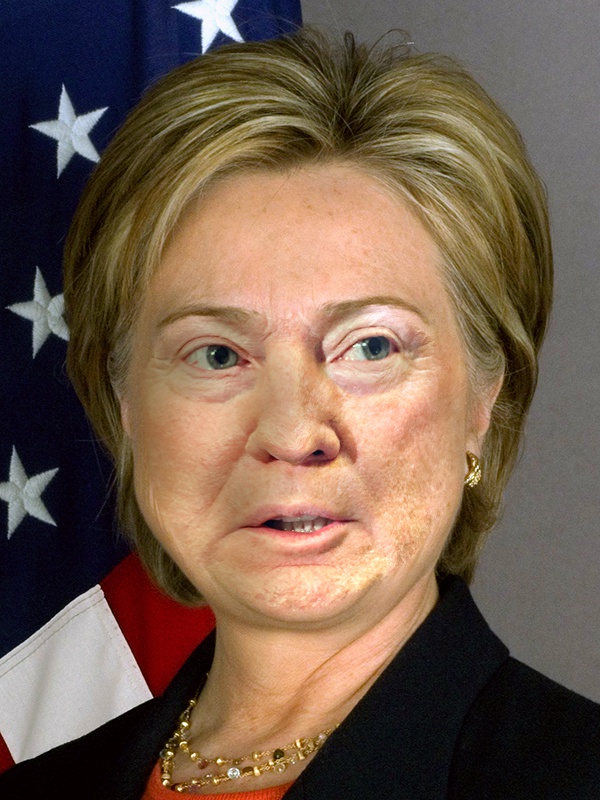

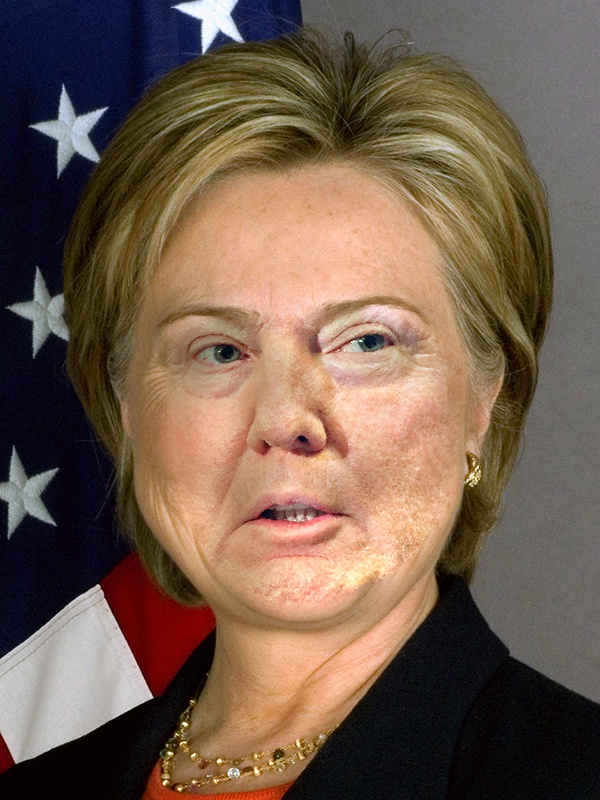

api/FaceSwap/results/output6_1.jpg

BIN

api/FaceSwap/results/output6_2_2d.jpg

BIN

api/FaceSwap/results/output6_3.jpg

BIN

api/FaceSwap/results/output6_4.jpg

BIN

api/FaceSwap/results/output6_7.jpg

BIN

api/FaceSwap/results/output6_7_2d.jpg

BIN

api/FaceSwap/results/output7_4.jpg

BIN

api/FaceSwap/results/warp_2d.jpg

BIN

api/FaceSwap/results/warp_3d.jpg

+ 1

- 0

api/FaceSwap/scripts/faceswap.sh

BIN

api/FaceSwap/src_img/16243455712576303.jpg

BIN

api/FaceSwap/src_img/16243457809067454.jpg

BIN

api/FaceSwap/src_img/16243459511696787.jpg

BIN

api/FaceSwap/src_img/16243467048560734.jpg

BIN

api/FaceSwap/src_img/16243470855158741.jpg

BIN

api/FaceSwap/src_img/16243482365798137.jpg

BIN

api/FaceSwap/src_img/1624348281687592.jpg

BIN

api/FaceSwap/src_img/1624349763441171.jpg

BIN

api/FaceSwap/src_img/16243498493934758.jpg

BIN

api/FaceSwap/src_img/16243509841846786.jpg

BIN

api/FaceSwap/src_img/1624350996556805.jpg

BIN

api/FaceSwap/src_img/162435100893648.jpg

BIN

api/FaceSwap/src_img/16243522235433133.jpg

BIN

api/FaceSwap/src_img/16243527314408908.jpg

BIN

api/FaceSwap/src_img/16243536374485693.jpg

BIN

api/FaceSwap/src_img/16243536586519773.jpg

BIN

api/FaceSwap/src_img/16243536800773168.jpg

BIN

api/FaceSwap/src_img/1624353831368393.jpg

BIN

api/FaceSwap/src_img/1624354063681436.jpg

BIN

api/FaceSwap/src_img/16243570235773947.jpg

BIN

api/FaceSwap/src_img/16243577875540392.jpg

BIN

api/FaceSwap/src_img/16243585956367.jpg