2 Commits fb4ff0d1ca ... c70d6c8b9d

| Author | Date |

|---|---|

|

|

1 year ago |

|

|

1 year ago |

8 changed files with 522 additions and 121 deletions

+ 9

- 2

RAG_app.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

BIN

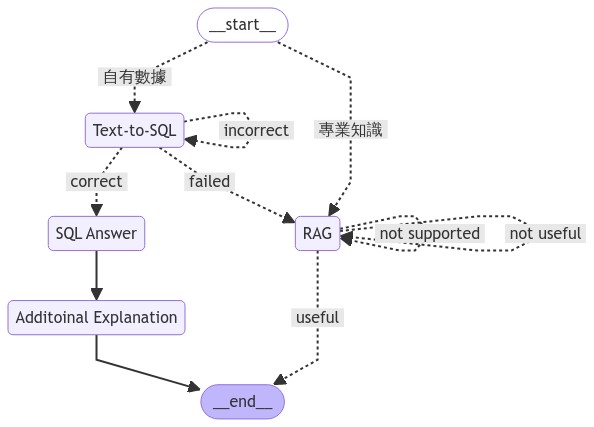

agent_workflow.png

File diff suppressed because it is too large

+ 244

- 94

ai_agent.ipynb

+ 65

- 25

ai_agent.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 0

- 0

file_loader/gsheet2neo4j.py

+ 100

- 0

post_processing_sqlparse.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 84

- 0

systex_app.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

+ 20

- 0

text_to_sql.py

|

|||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||

|

|

||